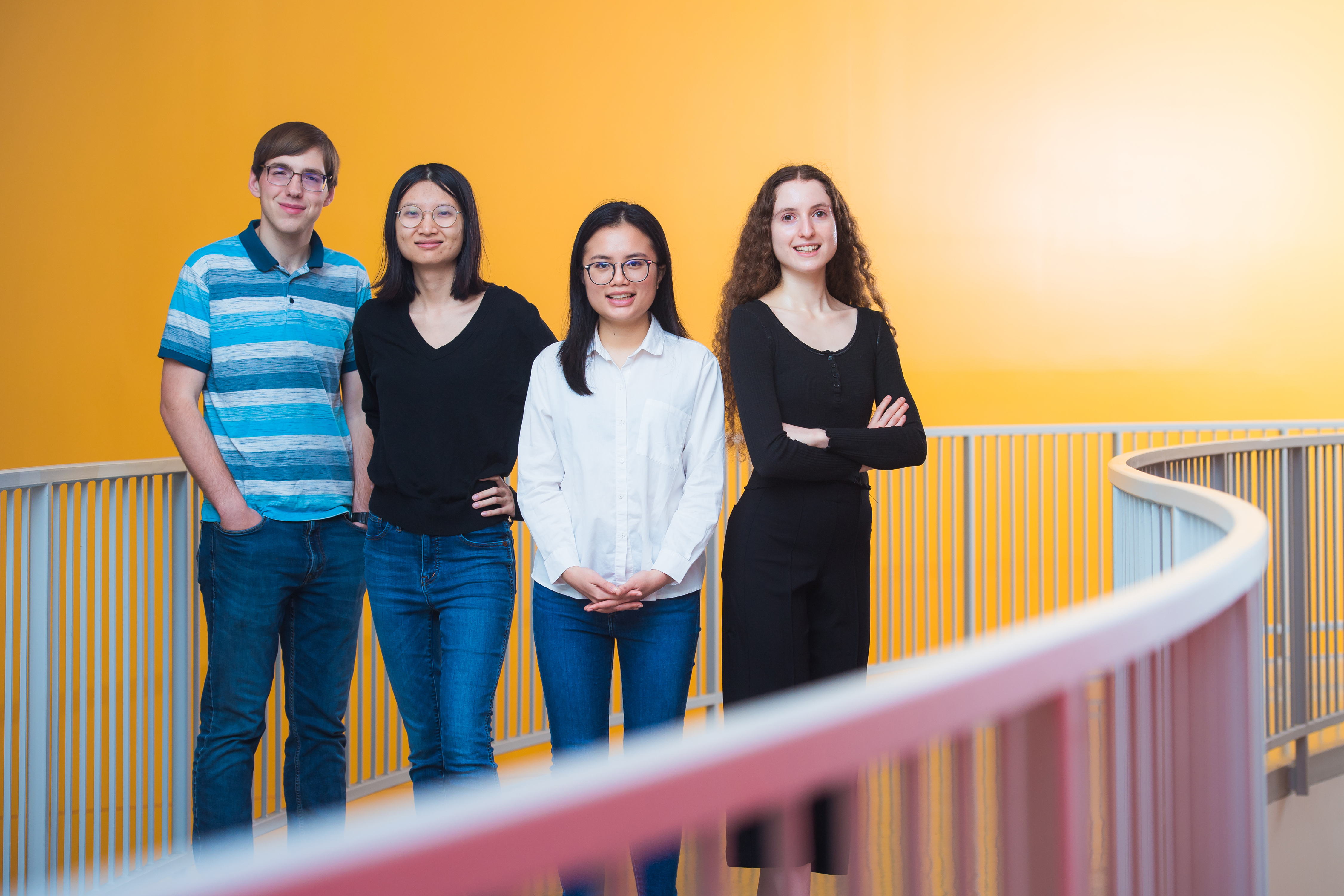

MIT undergraduate researchers Helena Merker, Harry Heiberger, and Linh Nguyen, and PhD student Tongtong Liu, exploit machine-learning techniques to determine the magnetic structure of materials.

Steve Nadis | Department of Nuclear Science and Engineering

Knowing the magnetic structure of crystalline materials is critical to many applications, including data storage, high-resolution imaging, spintronics, superconductivity, and quantum computing. Information of this sort, however, is difficult to come by. Although magnetic structures can be obtained from neutron diffraction and scattering studies, the number of machines that can support these analyses — and the time available at these facilities — is severely limited.

As a result, only about 1,500 experimentally determined magnetic structures have been tabulated to date. Researchers have also predicted magnetic structures by numerical means, but these calculations are lengthy, even on large, state-of-the-art supercomputers. They also become increasingly expensive as the crystal structures under consideration get larger, with power demands growing exponentially.

Now, researchers at MIT, Harvard University, and Clemson University — led by Mingda Li, MIT assistant professor of nuclear science and engineering, and Tess Smidt, MIT assistant professor of electrical engineering and computer science — have found a way to streamline this process by employing the tools of machine learning. “This might be a quicker and cheaper approach,” Smidt says.

The team’s results were recently published in the journal iScience. One unusual feature of this paper, apart from its novel findings, is that its first authors are three MIT undergraduates — Helena Merker, Harry Heiberger, and Linh Nguyen — plus one PhD student, Tongtong Liu.

Merker, Heiberger, and Nguyen joined the project as first-years in fall 2020, and they were given a sizable challenge: to design a neural network that can predict the magnetic structure of crystalline materials. They did not start from scratch, however, making use of “equivariant Euclidean neural networks” that were co-invented by Smidt in 2018. The advantage of this kind of network, Smidt explains, “is that we won’t get a different prediction for the magnetic order if a crystal is rotated or translated, which we know should not affect the magnetic properties.” That feature is especially helpful for examining 3D materials.
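The invariance Smidt describes can be illustrated with a toy calculation. The sketch below is not a Euclidean neural network; it only demonstrates the property such networks are built to guarantee. The function `toy_prediction` is a hypothetical stand-in for a model, and because it depends only on interatomic distances, rotating and translating the atoms leaves its output unchanged. (Equivariant networks go further: outputs that are themselves vectors rotate along with the input instead of staying fixed.)

```python
import math

def pairwise_distances(points):
    """Sorted interatomic distances: unchanged by any rotation or translation."""
    return sorted(
        math.dist(points[i], points[j])
        for i in range(len(points))
        for j in range(i + 1, len(points))
    )

def rotate_z(point, angle):
    """Rotate a 3D point about the z axis by `angle` radians."""
    x, y, z = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)

def toy_prediction(points):
    """Hypothetical stand-in for a model: it sees only distances, so it is invariant."""
    return sum(1.0 / d for d in pairwise_distances(points))

atoms = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 1.5, 0.0), (0.0, 0.0, 2.0)]
shift = (3.0, -1.0, 0.5)
moved = [tuple(a + b for a, b in zip(rotate_z(p, 0.7), shift)) for p in atoms]

print(math.isclose(toy_prediction(atoms), toy_prediction(moved)))  # True
```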

The elements of structure

The MIT group drew upon a database of nearly 150,000 substances compiled by the Materials Project at the Lawrence Berkeley National Laboratory, which provided information concerning the arrangement of atoms in the crystal lattice. The team used this input to assess two key properties of a given material: magnetic order and magnetic propagation.

Figuring out the magnetic order involves classifying materials into three categories: ferromagnetic, antiferromagnetic, and nonmagnetic. Magnetic atoms act like little magnets with their own north and south poles. Each such atom has a magnetic moment, which points from its south pole to its north pole. In a ferromagnetic material, Liu explains, “all the atoms are lined up in the same direction, the direction of the combined magnetic field produced by all of them.” In an antiferromagnetic material, the magnetic moment of each atom points opposite to those of its neighbors; the moments cancel one another in an orderly pattern that yields zero magnetization overall. In a nonmagnetic material, all the atoms could be nonmagnetic, having no magnetic moments whatsoever. Or the material could contain magnetic atoms whose moments point in random directions, so that the net result, again, is zero magnetization.
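To make the three categories concrete, here is a toy, rule-based labeler for a one-dimensional chain of magnetic moments. It is a hypothetical illustration of the definitions, not the team's method: the network predicts order from crystal structure and never sees the moments themselves, and real antiferromagnets can have far more complex patterns than simple up-down alternation. The function name `classify_order` and the tolerance `tol` are assumptions of this sketch.

```python
import math

def classify_order(moments, tol=1e-6):
    """Toy rule: label a 1D chain of moment vectors as 'FM', 'AFM', or 'NM'."""
    # No atom carries a moment: nonmagnetic.
    if all(math.hypot(*m) < tol for m in moments):
        return "NM"
    # Every moment identical to the first: ferromagnetic.
    if all(math.dist(m, moments[0]) < tol for m in moments):
        return "FM"
    # Each moment exactly opposite its neighbor: antiferromagnetic.
    if all(math.dist(a, tuple(-x for x in b)) < tol for a, b in zip(moments, moments[1:])):
        return "AFM"
    # Neither pattern: this toy lumps it into NM, mimicking randomly oriented moments.
    return "NM"

up, down = (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)
mixed = [(1.0, 0.0, 0.0), (0.0, 0.0, -1.0), (0.0, 1.0, 0.0),
         (-1.0, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, -1.0, 0.0)]

print(classify_order([up, up, up, up]))        # FM
print(classify_order([up, down, up, down]))    # AFM
print(classify_order([(0.0, 0.0, 0.0)] * 4))   # NM
print(classify_order(mixed))                   # NM
```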

The concept of magnetic propagation relates to the periodicity of a material’s magnetic structure. If you think of a crystal as a 3D arrangement of bricks, a unit cell is the smallest possible building block: the smallest number, and configuration, of atoms that can make up an individual “brick.” If the magnetic moments are arranged identically in every unit cell, the MIT researchers assigned the material a propagation value of zero. If, however, the magnetic moments change direction, and hence “propagate,” in moving from one cell to the next, the material was given a non-zero propagation value.
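A similar toy sketch captures the zero versus non-zero distinction. Here each unit cell's magnetic configuration is written as a tuple of hypothetical "up"/"down" labels, one per magnetic atom in the cell, for a one-dimensional row of cells. The helper `magnetic_period` (a name invented for this illustration) finds how many cells it takes for the pattern to repeat; a period of one cell corresponds to a propagation value of zero.

```python
def magnetic_period(cell_moments):
    """Smallest number of cells after which the per-cell moment pattern repeats."""
    if not cell_moments:
        raise ValueError("need at least one unit cell")
    n = len(cell_moments)
    for p in range(1, n):
        if all(cell_moments[i] == cell_moments[i % p] for i in range(n)):
            return p
    return n  # no shorter repeat within the sampled cells

def propagation_label(cell_moments):
    """'zero' if every unit cell has the same moments, otherwise 'non-zero'."""
    return "zero" if magnetic_period(cell_moments) == 1 else "non-zero"

same_every_cell = [("up", "down")] * 4    # two antiparallel atoms in each cell
alternating = [("up",), ("down",)] * 2    # the moment flips from cell to cell

print(propagation_label(same_every_cell))  # zero
print(propagation_label(alternating))      # non-zero
```

The first example is antiferromagnetic within each cell yet still has zero propagation, because every cell is identical; order and propagation are separate labels.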

A network solution

So much for the goals. How can machine-learning tools help achieve them? The students’ first step was to use a portion of the Materials Project database to train the neural network to find correlations between a material’s crystalline structure and its magnetic structure. Through educated guesses and trial and error, the students also learned that they achieved the best results when they included not just the atoms’ lattice positions but also their atomic weight, atomic radius, electronegativity (which reflects an atom’s tendency to attract electrons), and dipole polarizability (which indicates how easily an electric field can distort the atom’s electron cloud, a property that tends to grow as the outer electrons sit farther from the nucleus). During the training process, a large number of so-called “weights” are repeatedly fine-tuned.
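The input described above can be sketched as one feature vector per atom: its position in the unit cell followed by the four elemental properties. The names `AtomSite` and `featurize` are hypothetical, the property values are placeholders for two made-up elements "A" and "B" (not reference data), and how the team actually encoded and fed these features to the network is not described here.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# (atomic_weight, atomic_radius, electronegativity, dipole_polarizability)
ElementProps = Tuple[float, float, float, float]

@dataclass
class AtomSite:
    element: str
    frac_coords: Tuple[float, float, float]  # fractional position in the unit cell

def featurize(sites: List[AtomSite], props: Dict[str, ElementProps]) -> List[List[float]]:
    """Return one vector per atom: 3 coordinates followed by 4 elemental properties."""
    return [list(site.frac_coords) + list(props[site.element]) for site in sites]

# Placeholder numbers for two hypothetical elements (not real data).
PLACEHOLDER_PROPS: Dict[str, ElementProps] = {
    "A": (50.0, 1.4, 1.8, 60.0),
    "B": (16.0, 0.6, 3.4, 5.0),
}

cell = [AtomSite("A", (0.0, 0.0, 0.0)), AtomSite("B", (0.5, 0.5, 0.5))]
for row in featurize(cell, PLACEHOLDER_PROPS):
    print(row)
```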

“A weight is like the coefficient m in the equation y = mx + b,” Heiberger explains. “Of course, the actual equation, or algorithm, we use is a lot messier, with not just one coefficient but perhaps a hundred; x, in this case, is the input data, and you choose m so that y is predicted most accurately. And sometimes you have to change the equation itself to get a better fit.”
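Heiberger's analogy can be made concrete with the simplest possible case: fitting the two weights m and b of y = mx + b by gradient descent, which repeatedly nudges each weight in the direction that reduces the mean squared prediction error. This is a generic sketch of the idea, not the team's training code; a neural network applies the same kind of update to far more weights at once. The learning rate and step count are arbitrary choices for this small example.

```python
def fit_line(xs, ys, lr=0.01, steps=5000):
    """Tune the 'weights' m and b of y = m*x + b by gradient descent on mean squared error."""
    m, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of (1/n) * sum((m*x + b - y)**2) with respect to m and b.
        errors = [m * x + b - y for x, y in zip(xs, ys)]
        grad_m = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        m -= lr * grad_m
        b -= lr * grad_b
    return m, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]  # data generated from m = 2, b = 1
m, b = fit_line(xs, ys)
print(round(m, 3), round(b, 3))  # 2.0 1.0
```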

Next comes the testing phase. “The weights are kept as-is,” Heiberger says, “and you compare the predictions you get to previously established values [also found in the Materials Project database].”
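The testing phase can be sketched the same way: hold out part of the labeled data, fit on the rest, then freeze the fitted model and score it only on the held-out records. To keep the example short, the "model" is a deliberately trivial majority-class baseline, and the records are invented labels for hypothetical materials; none of it comes from the Materials Project or the paper.

```python
import random
from collections import Counter

def split(records, test_fraction=0.2, seed=42):
    """Shuffle reproducibly, then hold out a fraction of the records for testing."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def train_majority(train):
    """Trivial 'training': remember the most common label in the training set."""
    label = Counter(lbl for _, lbl in train).most_common(1)[0][0]
    return lambda material_id: label  # frozen: nothing changes after training

def accuracy(predict, test):
    """Fraction of held-out records whose prediction matches the known label."""
    return sum(predict(mid) == lbl for mid, lbl in test) / len(test)

# Invented records: (hypothetical material ID, known magnetic-order label).
records = [(f"material-{i}", "NM" if i % 3 else "FM") for i in range(30)]
train, test = split(records)
model = train_majority(train)
print(f"held-out accuracy: {accuracy(model, test):.2f}")
```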

As reported in iScience, the model had average accuracies of about 78 percent for predicting magnetic order and 74 percent for predicting magnetic propagation. For nonmagnetic materials, its accuracy in predicting magnetic order was 91 percent, even when the material contained magnetic atoms.
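Because the results mix an overall figure with the accuracy on one class (nonmagnetic materials), it may help to see how such numbers are computed. The sketch computes overall accuracy, per-class accuracy, and their unweighted mean (often called balanced accuracy). The labels are invented, and the article does not say which kind of average the reported percentages are, so this shows only the arithmetic, not how the paper's numbers were derived.

```python
from collections import defaultdict

def overall_accuracy(y_true, y_pred):
    """Fraction of all predictions that match the known labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def per_class_accuracy(y_true, y_pred):
    """Accuracy computed separately over the materials of each true class."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        hits[t] += int(t == p)
    return {c: hits[c] / totals[c] for c in totals}

# Invented labels for eight hypothetical materials.
y_true = ["NM", "NM", "NM", "NM", "FM", "FM", "AFM", "AFM"]
y_pred = ["NM", "NM", "NM", "NM", "FM", "AFM", "FM", "AFM"]

per_class = per_class_accuracy(y_true, y_pred)
balanced = sum(per_class.values()) / len(per_class)
print(overall_accuracy(y_true, y_pred))  # 0.75
print(per_class)                         # {'NM': 1.0, 'FM': 0.5, 'AFM': 0.5}
print(round(balanced, 3))                # 0.667
```

Here overall accuracy (75 percent) and balanced accuracy (about 67 percent) differ because the classes are unevenly represented and the toy predictions are best on the largest class.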

Charting the road ahead

The MIT investigators believe this approach could be applied to large molecules whose atomic structures are hard to discern, and even to alloys, which lack the regular, repeating atomic arrangement of a perfect crystal. “The strategy there is to take as big a unit cell (as big a sample) as possible and try to approximate it as a somewhat disordered crystal,” Smidt says.
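Smidt's strategy of approximating a disordered material as a large, somewhat disordered crystal can be illustrated with a random-substitution supercell: repeat a simple unit cell many times and assign the atomic species to sites at random. The simple-cubic lattice, the species names "A" and "B", the 75/25 composition, and the function name `random_alloy_supercell` are all hypothetical choices for this sketch; it builds only the structure a model would be given, not any prediction.

```python
import itertools
import random

def random_alloy_supercell(n, species, fractions, seed=0):
    """Hypothetical sketch: an n x n x n simple-cubic supercell with random site occupation.

    Returns (element, fractional_coords) pairs. The number of atoms of each
    species is fixed by `fractions`; only their placement is random.
    """
    sites = [tuple(i / n for i in idx) for idx in itertools.product(range(n), repeat=3)]
    counts = [round(f * len(sites)) for f in fractions]
    counts[-1] = len(sites) - sum(counts[:-1])  # absorb rounding in the last species
    labels = [el for el, c in zip(species, counts) for _ in range(c)]
    random.Random(seed).shuffle(labels)  # reproducible random placement
    return list(zip(labels, sites))

cell = random_alloy_supercell(4, ["A", "B"], [0.75, 0.25])
print(len(cell), sum(1 for el, _ in cell if el == "A"))  # 64 48
```

Fixing the species counts exactly, rather than drawing each site independently, keeps the composition identical from one random sample to the next.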

The current work, the authors wrote, represents one step toward “solving the grand challenge of full magnetic structure determination.” The “full structure” in this case means determining “the specific magnetic moments of every atom, rather than the overall pattern of the magnetic order,” Smidt explains.

“We have the math in place to take this on,” Smidt adds, “though there are some tricky details to be worked out. It’s a project for the future, but one that appears to be within reach.”

The undergraduates won’t participate in that effort, having already completed their work in this venture. Nevertheless, they all appreciated the research experience. “It was great to pursue a project outside the classroom that gave us the chance to create something exciting that didn’t exist before,” Merker says.

“This research, entirely led by undergraduates, started in 2020 when they were first-years. With Institute support from the ELO [Experiential Learning Opportunities] program and later guidance from PhD student Tongtong Liu, we were able to bring them together even while physically remote from each other. This work demonstrates how we can expand the first-year learning experience to include a real research product,” Li adds. “Being able to support this kind of collaboration and learning experience is what every educator strives for. It is wonderful to see their hard work and commitment result in a contribution to the field.”

“This really was a life-changing experience,” Nguyen agrees. “I thought it would be fun to combine computer science with the material world. That turned out to be a pretty good choice.”